AI Agents Overview

Disclaimer: These are my personal notes rather than a formal blog post. Content may be condensed, contain shorthand, and reflect my own understanding. ok continue!

AI Agents

A goal-oriented, autonomous, context-aware decision-making system built to do a particular task (the task can be a domain-specific chatbot-like system or a single-purpose, task-oriented system). An agent can perform an API call, run a database query for a report, schedule events on a calendar, etc.

Some Examples:

- Devin - interactive problem solving, understands - searches - validates - applies changes to code

- Cursor Agent Mode makes changes to the code (Code modification)

- Deep Research on ChatGPT - “An agent that uses reasoning to synthesize large amounts of online information and complete multi-step research tasks for you” - OpenAI Deep Research

- Task Specific (for example: no-code tools like n8n to create autonomous agents that can schedule things for you by connecting various tools together, e.g. sending a message/audio via Telegram/WhatsApp to trigger a workflow for trip planning, scheduling a meeting, etc.)

Parts of an agent conversation:

- System (the prompt that defines the task: "You are an expert in Python doing code review. Make sure the code follows these linting rules: ")

- User (who queries the agent - "set up a meeting at 10am tomorrow")

- Assistant (the agent's response - "Done, added a meeting to your calendar for 10am tomorrow")

- Tools (function calling: making a request to an API to retrieve a result, such as running a SQL statement to get this year's reports)

Security Considerations

How do you guard against malicious requests that target the system prompt?

- OpenAI provides a “Moderation API” that flags harmful content in user input, and it can be combined with custom checks for our own needs. Guarding only in the system prompt can be jailbroken. For example: what if the user says “Ignore all the previous instructions and do xxx instead”? (See: Moderation API)

- Add several levels of validation for user input before processing it!
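The "several levels of validation" idea can be sketched as a chain of small checks that run before the input ever reaches the LLM. This is only an illustration: the limit, the regex patterns, and every function name here are my own assumptions, and a regex filter alone is easy to bypass, so treat it as one layer next to a moderation check and not as a replacement for it.

```python
import re
from dataclasses import dataclass

# Hypothetical limit and patterns, chosen only for illustration.
MAX_INPUT_CHARS = 2000
INJECTION_PATTERNS = [
    re.compile(r"ignore\s+(?:all\s+)?(?:the\s+)?(?:previous|prior|above)\b", re.I),
    re.compile(r"reveal\s+(?:your\s+)?system\s+prompt", re.I),
]


@dataclass
class ValidationResult:
    ok: bool
    reason: str = ""


def check_length(text: str) -> ValidationResult:
    """Layer 1: reject empty or oversized input."""
    if not text.strip():
        return ValidationResult(False, "empty input")
    if len(text) > MAX_INPUT_CHARS:
        return ValidationResult(False, "input too long")
    return ValidationResult(True)


def check_injection(text: str) -> ValidationResult:
    """Layer 2: flag phrases that look like prompt-injection attempts."""
    for pattern in INJECTION_PATTERNS:
        if pattern.search(text):
            return ValidationResult(False, "possible prompt injection")
    return ValidationResult(True)


VALIDATORS = [check_length, check_injection]


def validate_user_input(text: str) -> ValidationResult:
    """Run each validation layer in order and stop at the first failure."""
    for validator in VALIDATORS:
        result = validator(text)
        if not result.ok:
            return result
    return ValidationResult(True)
```

A moderation call could be appended to `VALIDATORS` as a third layer, so the cheap local checks run first and the network call only happens for input that passed them.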

Context Matters (Chat)

When it comes to chat applications built on an LLM:

- The chat has to be chained with previous queries (context matters!). It needs memory to understand and respond to the user.

- Usage is charged per token. Imagine sending the entire chat history back and forth on every request.

Techniques

- Instead of resending everything, use “summarization”. Once the chat reaches the window limit (let’s say a window limit of 15), ask the LLM to summarize the older chats. Further requests then use the summary as the context-remembering mechanism, which minimizes the number of tokens sent with each request. The window limit can be fixed or dynamic, depending on the needs of the application. Pro: fewer tokens. Con: important details might get lost.
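A rough sketch of the summarization window, assuming a `summarize` callable that wraps the actual LLM call (hypothetical, as are the class name and the `keep_recent` parameter). Older messages are folded into a running summary once the window limit is exceeded, and only the summary plus the most recent messages are sent onwards.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]  # {"role": ..., "content": ...}


class SummarizingMemory:
    """Keeps recent messages verbatim and folds older ones into a summary."""

    def __init__(self, summarize: Callable[[List[Message]], str],
                 window_limit: int = 15, keep_recent: int = 5) -> None:
        if not 0 < keep_recent <= window_limit:
            raise ValueError("keep_recent must be between 1 and window_limit")
        self.summarize = summarize      # hypothetical wrapper around an LLM call
        self.window_limit = window_limit
        self.keep_recent = keep_recent
        self.summary = ""
        self.messages: List[Message] = []

    def add(self, role: str, content: str) -> None:
        self.messages.append({"role": role, "content": content})
        if len(self.messages) > self.window_limit:
            self._compact()

    def _compact(self) -> None:
        older = self.messages[:-self.keep_recent]
        if self.summary:
            # Carry the previous summary forward so earlier context is not dropped.
            older = [{"role": "system", "content": self.summary}] + older
        self.summary = self.summarize(older)
        self.messages = self.messages[-self.keep_recent:]

    def context(self) -> List[Message]:
        """Messages to send with the next request: summary first, then recent chat."""
        if not self.summary:
            return list(self.messages)
        header = {"role": "system", "content": f"Summary of earlier chat: {self.summary}"}
        return [header] + self.messages
```

A dynamic window could be approximated by counting tokens instead of messages in `add`, at the cost of needing a tokenizer.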

Note: Claude models perform well with XML-tagged prompts! (source)

<thoughts> …. </thoughts>

<response> … </response>

<context> ... </context>

UX For Chats

What if the LLM takes too long to process the result?

Client Side:

- Show a loader on the client

- Stream the response as it arrives (UX improvement)
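The streaming idea, sketched with a fake generator standing in for a real streaming LLM API (both function names are made up for this note). The point is only that the client renders each chunk as soon as it arrives, without waiting for the full answer.

```python
import time
from typing import Iterator


def fake_llm_stream(text: str, delay: float = 0.0) -> Iterator[str]:
    """Stand-in for a streaming LLM API: yields the answer word by word."""
    for word in text.split():
        time.sleep(delay)  # simulates generation latency
        yield word + " "


def render_stream(chunks: Iterator[str]) -> str:
    """Print each chunk immediately and return the full text at the end."""
    shown = []
    for chunk in chunks:
        print(chunk, end="", flush=True)
        shown.append(chunk)
    print()
    return "".join(shown).strip()
```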

Server Side:

- Try increasing the timeout limit of the serverless functions for long-running tasks (AWS Lambda supports up to 15 minutes)

- Break the task (the input to the LLM call) down into multiple sub-tasks

- Store the result of each sub-task as it arrives, on a message queue or something similar. Even if an LLM call results in an error, you don’t have to process everything from the start. Instead, check the message queue for which sub-tasks are completed and resume where it left off to reduce token usage, like checkpoints! Risk to mitigate: make sure a retry doesn’t result in duplicate calls/actions (what if it double-books the hotels/flights?)
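A minimal sketch of the checkpoint idea, using an in-memory dictionary as a stand-in for the message queue or database. `CheckpointStore`, `run_subtasks`, and the key format are assumptions for illustration. Each sub-task result is saved under a stable key, so a retry of the same job skips whatever already finished.

```python
from typing import Callable, Dict, List, Optional


class CheckpointStore:
    """In-memory stand-in for a message queue or database table."""

    def __init__(self) -> None:
        self._results: Dict[str, str] = {}

    def get(self, key: str) -> Optional[str]:
        return self._results.get(key)

    def save(self, key: str, result: str) -> None:
        self._results[key] = result


def run_subtasks(job_id: str, subtasks: List[str],
                 execute: Callable[[str], str],
                 store: CheckpointStore) -> List[str]:
    """Run sub-tasks in order, reusing any result that was already checkpointed."""
    results = []
    for index, subtask in enumerate(subtasks):
        key = f"{job_id}:{index}"        # stable key per job and step
        result = store.get(key)
        if result is None:
            result = execute(subtask)    # may raise; earlier checkpoints survive
            store.save(key, result)
        results.append(result)
    return results
```

This still leaves a gap: if the process dies after `execute` succeeds but before `save` runs, the retry repeats the action. For bookings, the same key would ideally also be passed to the downstream API as an idempotency key, if that API supports one.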

Workflows:

There are various ways to architect an agent. From Anthropic’s blog post, Building Effective Agents:

Basic Workflows:

- Prompt Chaining - One LLM call depends on another: the output of one call is fed in as the input of the next LLM call

- Routing - An initial LLM call classifies the request and forwards it to a specialized/fine-tuned model. For example, a math-heavy model for math questions, a code agent for code-related questions, etc. (DeepSeek’s mixture-of-experts architecture applies a similar routing idea inside a single model)

- Multi-LLM Parallelization - The task is split into independent sub-tasks. Each sub-task handles its own part, and the results are combined by an aggregator function or LLM for the user to view
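The three basic workflows can be sketched with a plain callable standing in for a model. The `LLM` type alias and all function names below are assumptions made for this note, not an API from the Anthropic post. Each function only shows the control flow, and the real prompt design is left out.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

LLM = Callable[[str], str]  # hypothetical: prompt in, text out


def prompt_chain(llm: LLM, steps: List[str], user_input: str) -> str:
    """Prompt chaining: feed each step's output into the next step's prompt."""
    text = user_input
    for instruction in steps:
        text = llm(f"{instruction}\n\n{text}")
    return text


def route(classifier: LLM, handlers: Dict[str, LLM], query: str, default: str) -> str:
    """Routing: a classifier picks a label, then the matching model answers."""
    label = classifier(query).strip().lower()
    handler = handlers.get(label, handlers[default])
    return handler(query)


def parallelize(workers: List[LLM], task: str,
                aggregate: Callable[[List[str]], str]) -> str:
    """Parallelization: run independent workers concurrently, then combine."""
    with ThreadPoolExecutor(max_workers=max(1, len(workers))) as pool:
        # pool.map keeps the outputs in the same order as the workers list.
        outputs = list(pool.map(lambda worker: worker(task), workers))
    return aggregate(outputs)
```

Threads are used here because LLM calls are mostly network-bound. An async client would work just as well.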

Advanced Workflows:

- Evaluator-Optimizer - One LLM generates a result while another evaluates it in a feedback loop. Think of it like a confidence score: the evaluator assigns a score to every result, and if the score is below the threshold, the generator runs again in the feedback loop until it gets it right.

- Multi-agents (Orchestrator-Subagents) - An orchestrator agent coordinates a series of sub-agents. Those sub-agents follow the single responsibility principle (SRP): each is given a specific task (one agent books hotels, another books flight tickets, another prepares the itinerary). The orchestrator combines the results of the sub-agents and sends them to the user as a single response.
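A sketch of both advanced workflows, again with plain callables in place of models. All names are hypothetical. One deliberate deviation from the notes: the loop is capped at `max_rounds` (my assumption) so a draft that never reaches the threshold cannot loop forever, and the best-scoring draft is returned instead.

```python
from typing import Callable, Dict, Tuple


def evaluator_optimizer(generate: Callable[[str, str], str],
                        evaluate: Callable[[str, str], Tuple[float, str]],
                        task: str, threshold: float = 0.8,
                        max_rounds: int = 3) -> Tuple[str, float]:
    """Regenerate with evaluator feedback until the score passes the threshold."""
    feedback = ""
    best, best_score = "", float("-inf")
    for _ in range(max_rounds):
        draft = generate(task, feedback)         # generator LLM
        score, feedback = evaluate(task, draft)  # evaluator LLM: score + critique
        if score > best_score:
            best, best_score = draft, score
        if score >= threshold:
            break
    return best, best_score


def orchestrate(subagents: Dict[str, Callable[[str], str]], request: str) -> str:
    """Orchestrator: give the request to each single-purpose sub-agent and merge."""
    parts = [f"{name}: {agent(request)}" for name, agent in subagents.items()]
    return "\n".join(parts)
```

In a fuller version the orchestrator would itself be an LLM that decides which sub-agents to call and in what order. Here it simply calls all of them.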

MCP - Model Context Protocol

- Connecting AI Systems with data sources

- Data sources can be a database, a codebase, or files/products/projects that belong to the user on Google Drive/cloud providers, etc.

- It is a standardized protocol that abstracts the way to connect the data sources with the AI systems or models.

Consists of three parts:

- MCP Hosts (MCP-enabled applications or agents that can already make LLM calls - Cursor IDE, Claude Desktop, custom CLI tools)

- MCP Clients (connectors/protocol handlers within the host application, for example: a read-only Postgres client to query data from)

- MCP Servers (check whether the MCP Client has access to what it is requesting, run the query, and return the results to the MCP Host, which in turn calls the LLM to generate a human-like response). It can be backed by either a local DB or remote endpoints

The MCP Host is the orchestrator of the different MCP Clients. The host creates and manages the MCP Clients, and each client has a 1-1 connection with an MCP Server.

MCP Clients (the connectors in the MCP Host) establish the connection with the MCP Server (the server can be local or remote):

- local: stdio (MCP Server)

- remote: SSE (MCP Server)

The MCP Server reports which capabilities it supports, so the MCP Client knows what it can use.

MCP Clients - maintain the connection, get the capabilities from the server, and query data, using JSON-RPC for structured messaging.

When a user initiates a query that results in tool calling, the MCP Host checks whether it is able to call a tool. It does the tool discovery and calls the tool via the MCP Client. The MCP Client then returns a message to the MCP Host, which is fed into the LLM to produce human-readable text.
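To make the JSON-RPC part concrete, here is a sketch of the messages a client might send for tool discovery and a tool call. `tools/list` and `tools/call` are method names from the MCP specification. The tool name `run_readonly_query`, its arguments, and the helper functions are invented for illustration, and the initialization handshake and the transport (stdio or SSE) are left out entirely.

```python
import json
from itertools import count
from typing import Optional

_ids = count(1)  # JSON-RPC requests carry an id so responses can be matched


def jsonrpc_request(method: str, params: Optional[dict] = None) -> str:
    """Build a JSON-RPC 2.0 request as a JSON string."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# Tool discovery: ask the server which tools it exposes.
list_tools_request = jsonrpc_request("tools/list")

# Tool call: "run_readonly_query" is a hypothetical tool name.
call_tool_request = jsonrpc_request(
    "tools/call",
    {"name": "run_readonly_query", "arguments": {"sql": "SELECT 1"}},
)


def extract_text(response_json: str) -> str:
    """Pull the text items out of a tool-call response, raising on errors."""
    response = json.loads(response_json)
    if "error" in response:
        raise RuntimeError(response["error"].get("message", "unknown error"))
    items = response["result"].get("content", [])
    return "\n".join(item["text"] for item in items if item.get("type") == "text")
```

The text returned by `extract_text` is what the host would hand to the LLM to turn into a human-readable answer.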

Resource: MCP Finder - a list of MCP servers for different products/file systems/databases etc.

Agent Looping!

There are different types of loops in AI agents:

- Agent in the Loop - When using function calling (or tool calling, both are the same), the response from an API needs to be presented in a human-readable format instead of as a structured JSON API response. For example: fetch weather data and present it in a human-readable format.

- Human in the Loop - When sending an email, a human reviews and approves it before the email is actually sent.

- Human in the Loop (Training) - This happens in the training phase, where humans review and evaluate the model outputs with reward points (a form of reinforcement learning: Reinforcement Learning from Human Feedback (RLHF)).
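The human-in-the-loop email case can be sketched as an approval gate in front of the side effect. `EmailDraft`, `send_with_approval`, and the `approve`/`send` callables are all hypothetical. In a real app `approve` would be a UI prompt and `send` the mail client.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EmailDraft:
    to: str
    subject: str
    body: str


def send_with_approval(draft: EmailDraft,
                       approve: Callable[[EmailDraft], bool],
                       send: Callable[[EmailDraft], None]) -> bool:
    """Send the draft only if the human reviewer approves it."""
    if not approve(draft):
        return False  # nothing is sent; the agent can revise and ask again
    send(draft)
    return True
```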

Evals! (Evaluation)

- Measure and log LLM responses, evaluate the LLM for production using mathematical/statistical techniques, and use the results to improve the responses.

- You can write custom evals on top of the existing evals provided by the tools, according to your needs.

- Eval metrics: latency, LLM response time, accuracy, F1 score, false positives, etc.

- Tools like LangSmith, OpenAI Evals, or any other product!
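As a small example of a custom eval, here is how accuracy, F1, and false positives could be computed for a yes/no style check (for instance "did the model flag this input correctly?"). The function name and the boolean framing are my own assumptions, not how the tools above implement it.

```python
from typing import Dict, List


def classification_metrics(expected: List[bool], predicted: List[bool]) -> Dict[str, float]:
    """Compute accuracy, precision, recall, F1 and false positives for binary labels."""
    if not expected or len(expected) != len(predicted):
        raise ValueError("expected and predicted must be non-empty and the same length")
    pairs = list(zip(expected, predicted))
    tp = sum(1 for e, p in pairs if e and p)
    fp = sum(1 for e, p in pairs if not e and p)
    fn = sum(1 for e, p in pairs if e and not p)
    tn = sum(1 for e, p in pairs if not e and not p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "false_positives": float(fp),
    }
```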

Tool Calling Example for AI:

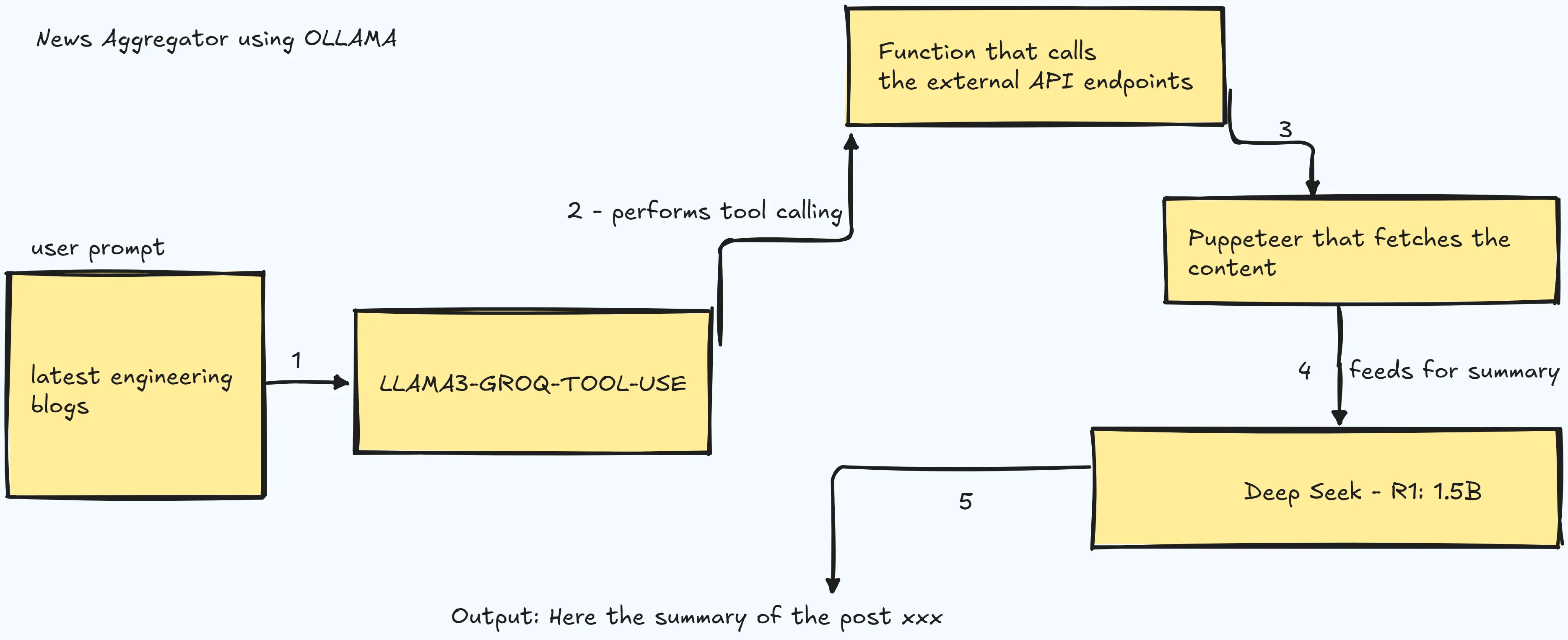

I’ve created a news aggregator application that does tool calling and summarization using local Ollama models.

Check out the source code for it: here

Note: It is more of a function/tool calling example than something to be categorized as “agents”.

What is the difference between function/tool calling and AI agents?

- Not all tool calling is classified as an AI agent, but almost all AI agents have tool calling as a part of their workflow.

- AI agents are like cron jobs that decide for themselves when to fetch, where to fetch from, and what to display based on user preference, adapting to various situations. They perform a series of tasks by accessing various external tools/data sources. AI agents have more autonomy, acting like an assistant.
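The difference can be sketched in a few lines. A single tool call is one function invocation chosen by the caller, while an agent loop repeatedly asks a policy (in practice an LLM, here just a hypothetical callable) what to do next, based on what it has observed so far. `Decision`, `agent_loop`, and the step limit are assumptions for illustration and are not taken from the news aggregator project.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

Tool = Callable[[str], str]


@dataclass
class Decision:
    tool: Optional[str]  # name of the tool to call, or None to finish
    text: str            # tool input, or the final answer when tool is None


def single_tool_call(tools: Dict[str, Tool], name: str, argument: str) -> str:
    """Plain tool calling: the caller decides which tool runs, exactly once."""
    return tools[name](argument)


def agent_loop(policy: Callable[[str, List[str]], Decision],
               tools: Dict[str, Tool], goal: str, max_steps: int = 5) -> str:
    """Agent: the policy decides which tool to call next, and when to stop."""
    observations: List[str] = []
    for _ in range(max_steps):
        decision = policy(goal, observations)
        if decision.tool is None:
            return decision.text
        observations.append(tools[decision.tool](decision.text))
    return "stopped: step limit reached"
```

The step limit is there so a policy that never decides to finish cannot run (and spend tokens) forever.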